One of the best features of the ZDLRA is the ability to dynamically create a full Keep backup and send it to Cloud (ZFSSA or OCI) for archival storage.

Here is a great article by Oracle Product Manager Marco Calmasini that explains how to use this feature.

In this blog post, I will go through the RACLI steps that you execute, and explain what is happening with each step.

The documentation I started with is the 21.1 Administrator's Guide, which can be found here. If you are on a more current release, you can find the steps in the chapter named "Archiving Backups to Cloud".

Deploying the OKV Client Software

To ensure that all the backup pieces are encrypted, you must use OKV (Oracle Key Vault) to manage the encryption keys that are being used by the ZDLRA. Even if you are using TDE for the datafiles, the copy-to-cloud process encrypts ALL backup pieces, including the backups of the controlfile and spfile, which aren't already encrypted.

I am not going to go through the detailed steps that are in the documentation to configure OKV, but I will just go through the high level processes.

The most important items to note in this section are:

- Both nodes of the ZDLRA are added as endpoints, and they should have a descriptive name that identifies them, and ties them together.

- A new endpoint group should be created with a descriptive name, and both nodes should be added to the new endpoint group.

- A new virtual wallet is created with a descriptive name, and this needs to be both associated with the 2 endpoints and set as the default wallet for the endpoints.

- Both endpoints of the ZDLRA are enrolled through OKV, and during the enrollment process a unique enrollment token file is created for each node. It is best to immediately rename each file to identify the endpoint it is associated with, using the format <myhost>-okvclient.jar.

- Copy the enrollment token files to the /radump directory on the appropriate host.

NOTE: It is critical that you follow these directions exactly, and that each node has the appropriate enrollment token with the appropriate name before continuing.
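
Because the token naming is easy to get wrong, here is a minimal Python sketch of the naming rule above. It assumes you have already collected the file names found in /radump on each node; the node names and the helper names (`enrollment_token_path`, `missing_tokens`) are hypothetical and are not part of racli or OKV.

```python
from pathlib import PurePosixPath
from typing import Dict, List, Set

# Staging directory named in the steps above.
RADUMP_DIR = PurePosixPath("/radump")


def enrollment_token_path(hostname: str) -> PurePosixPath:
    """Expected location of a node's token, using <myhost>-okvclient.jar."""
    return RADUMP_DIR / f"{hostname}-okvclient.jar"


def missing_tokens(files_by_node: Dict[str, Set[str]]) -> List[str]:
    """Return the nodes whose own enrollment token is not in /radump.

    files_by_node maps each node name to the file names seen in /radump
    on that node (gathered however you like; that part is not shown).
    """
    missing = []
    for node, names in files_by_node.items():
        if enrollment_token_path(node).name not in names:
            missing.append(node)
    return missing
```

A node that only holds the other node's token is reported as missing, which is the mix-up the NOTE above warns about.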

#1 Add credential_wallet

racli add credential_wallet

Fri Jan 1 08:56:27 2018: Start: Add Credential Wallet

Enter New Keystore Password: <OKV_endpoint_password>

Confirm New Keystore Password:

Enter New Wallet Password: <ZDLRA_credential_wallet_password>

Confirm New Wallet Password:

Re-Enter New Wallet Password:

Fri Jan 1 08:56:40 2018: End: Add Credential Wallet

The first step to configure the ZDLRA to talk to OKV is to have the ZDLRA create a password protected SEPS wallet file that contains the OKV password.

This step asks for 2 new passwords when executing:

- New Keystore Password - This password is the OKV endpoint password. This password is used to communicate with OKV by the database, and can be used with okvutil to interact with OKV directly.

- New Wallet Password - This password is used to protect the wallet file itself that will contain the OKV keystore password.

This password file is shared across both nodes.

Update contents - "racli add credential"

Change password - "racli alter credential_wallet"

#2 Add keystore

racli add keystore --type hsm --restart_db

RecoveryAppliance/log/racli.log

Fri Jan 1 08:57:03 2018: Start: Configure Wallets

Fri Jan 1 08:57:04 2018: End: Configure Wallets

Fri Jan 1 08:57:04 2018: Start: Stop Listeners, and Database

Fri Jan 1 08:59:26 2018: End: Stop Listeners, and Database

Fri Jan 1 08:59:26 2018: Start: Start Listeners, and Database

Fri Jan 1 09:02:16 2018: End: Start Listeners, and Database

The second step to configure the ZDLRA to talk to OKV is to configure the ZDLRA database to communicate with OKV. The database on the ZDLRA will be configured to use the OKV wallet for encryption keys, which requires a bounce of the database.

Backout - "racli remove keystore"

Status - "racli status keystore"

Update - "racli alter keystore"

Disable - "racli disable keystore"

Enable - "racli enable keystore"

#3 Install okv_endpoint (OKV client software)

racli install okv_endpoint

Wed August 23 20:14:40 2018: Start: Install OKV End Point [node01]

Wed August 23 20:14:43 2018: End: Install OKV End Point [node01]

Wed August 23 20:14:43 2018: Start: Install OKV End Point [node02]

Wed August 23 20:14:45 2018: End: Install OKV End Point [node02]

The third step to configure the ZDLRA to talk to OKV is to have the ZDLRA nodes (OKV endpoints) enrolled in OKV. This step will install the OKV software on both nodes of the ZDLRA, and complete the enrollment of the 2 ZDLRA nodes with OKV. The password that was entered in step #1 for OKV is used during the enrollment process.

Status - "racli status okv_endpoint"

NOTE: At the end of this step, the status command should return a status of online from both nodes.

Node: node02

Endpoint: Online

Node: node01

Endpoint: Online
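
If you want to script this check, a small Python sketch like the one below can parse the status text. It assumes the output has exactly the "Node:" / "Endpoint:" layout shown above, which may differ between releases; the function names are hypothetical.

```python
from typing import Dict


def parse_endpoint_status(output: str) -> Dict[str, str]:
    """Map each node name to its endpoint status from the status text."""
    status: Dict[str, str] = {}
    node = None
    for raw in output.splitlines():
        line = raw.strip()
        if line.startswith("Node:"):
            node = line.split(":", 1)[1].strip()
        elif line.startswith("Endpoint:") and node is not None:
            status[node] = line.split(":", 1)[1].strip()
    return status


def all_online(status: Dict[str, str], expected_nodes: int = 2) -> bool:
    """True only if every expected node reports an Online endpoint."""
    return len(status) == expected_nodes and all(
        value.lower() == "online" for value in status.values()
    )


# Sample text copied from the status output above.
SAMPLE = """Node: node02

Endpoint: Online

Node: node01

Endpoint: Online
"""
```

Calling `all_online(parse_endpoint_status(SAMPLE))` on the sample text returns True; a node that is missing or not Online makes it return False.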

#4 Open the Keystore

racli enable keystore

The fourth step to configure the ZDLRA to talk to OKV is to have the ZDLRA nodes open the encryption wallet in the database. This step will use the saved passwords from step #1 and open up the encryption wallet.

NOTE: This will need to be executed after any restarts of the database on the ZDLRA.

#5 Create a TDE master key for the ZDLRA in the Keystore

racli alter keystore --initialize_key

The final step to configure the ZDLRA to talk to OKV is to have the ZDLRA create the master encryption key for the ZDLRA in the wallet.
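
To keep the order of the five steps straight, here is a short Python sketch that only prints them as a checklist. The commands are the ones shown in this post; the structure and the `render_runbook` helper are hypothetical, and nothing is executed.

```python
from typing import List, Sequence, Tuple

# The five OKV configuration steps from this post, in order.
OKV_STEPS: Sequence[Tuple[str, List[str]]] = (
    ("Add credential_wallet", ["racli", "add", "credential_wallet"]),
    ("Add keystore", ["racli", "add", "keystore", "--type", "hsm", "--restart_db"]),
    ("Install okv_endpoint", ["racli", "install", "okv_endpoint"]),
    ("Open the Keystore", ["racli", "enable", "keystore"]),
    ("Create a TDE master key", ["racli", "alter", "keystore", "--initialize_key"]),
)

# Per the NOTE in step #4, this must be rerun after any database restart.
RERUN_AFTER_RESTART = ["racli", "enable", "keystore"]


def render_runbook(steps: Sequence[Tuple[str, List[str]]] = OKV_STEPS) -> List[str]:
    """Return one checklist line per step, numbered from 1."""
    return [
        f"#{number} {title}: {' '.join(command)}"
        for number, (title, command) in enumerate(steps, start=1)
    ]


if __name__ == "__main__":
    for line in render_runbook():
        print(line)
```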

Creating Cloud Objects for Copy-to-Cloud

These steps create the cloud objects necessary to send backups to a cloud location.

NOTE: If you are configuring multiple cloud locations, you may go through these steps for each location.

Configure public/private key credentials

Authentication with the object storage is done using an X.509 certificate. The ZDLRA steps outlined in the documentation will generate a new pair of API signing keys and register the new set of keys.

You can also use any set of API keys that you previously generated by putting your private key in the shared location on the ZDLRA nodes.

In OCI each user can only have 3 sets of API keys, but the ZFSSA has no restrictions on the number of API signing keys that can be created.

Each "cloud_key" represents an API signing key pair, and each cloud_key contains

- pvt_key_path - Shared location on the ZDLRA where the private key is located

- fingerprint - fingerprint associated with the private key to identify which key to use.

You can use the same "cloud_key" to authenticate to multiple buckets, and even different cloud locations.

Documentation steps to create new key pair

#1 Add Cloud_key

racli add cloud_key --key_name=sample_key

Tue Jun 18 13:22:07 2019: Using log file /opt/oracle.RecoveryAppliance/log/racli.log

Tue Jun 18 13:22:07 2019: Start: Add Cloud Key sample_key

Tue Jun 18 13:22:08 2019: Start: Creating New Keys

Tue Jun 18 13:22:08 2019: Oracle Database Cloud Backup Module Install Tool, build 19.3.0.0.0DBBKPCSBP_2019-06-13

Tue Jun 18 13:22:08 2019: OCI API signing keys are created:

Tue Jun 18 13:22:08 2019: PRIVATE KEY --> /raacfs/raadmin/cloud/key/sample_key/oci_pvt

Tue Jun 18 13:22:08 2019: PUBLIC KEY --> /raacfs/raadmin/cloud/key/sample_key/oci_pub

Tue Jun 18 13:22:08 2019: Please upload the public key in the OCI console.

Tue Jun 18 13:22:08 2019: End: Creating New Keys

Tue Jun 18 13:22:09 2019: End: Add Cloud Key sample_key

This step is used to generate a new set of API signing keys.

The output of this step is a shared set of files on the ZDLRA which are stored in:

/raacfs/raadmin/cloud/key/{key_name}/

In order to complete the cloud_key information, you need to add the public key to OCI, or to the ZFS and save the fingerprint that is associated with the public key. The fingerprint is used in the next step.
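
As a quick reference for where the files land, the Python sketch below builds the key file paths for a given key name. It assumes the file names oci_pvt and oci_pub seen in the log output above stay the same for every key; `cloud_key_files` is a hypothetical helper, not a racli function.

```python
from pathlib import PurePosixPath
from typing import Dict

# Shared key directory shown in the "add cloud_key" output above.
KEY_ROOT = PurePosixPath("/raacfs/raadmin/cloud/key")


def cloud_key_files(key_name: str) -> Dict[str, PurePosixPath]:
    """Return the expected private and public key paths for a cloud_key."""
    if not key_name or "/" in key_name:
        raise ValueError("key_name must be a simple, non-empty name")
    base = KEY_ROOT / key_name
    # Assumption: file names match the sample log (oci_pvt / oci_pub).
    return {"private": base / "oci_pvt", "public": base / "oci_pub"}
```

The public key path is the file you upload to OCI or the ZFSSA to obtain the fingerprint.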

#2 Alter cloud_key

racli alter cloud_key

--key_name=sample_key

--fingerprint=12:34:56:78:90:ab:cd:ef:12:34:56:78:90:ab:cd:ef

The fingerprint that is associated with the public key (from the previous step) is added to the ZDLRA cloud_key information so that it can be used for authentication.

Both the private key and the fingerprint are needed to use the API signing key for credentials.
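
A pasted fingerprint is easy to truncate, so a small sanity check before running the command can help. The Python sketch below assumes the fingerprint always has the shape of the sample above (16 colon-separated hex pairs); the helper names are hypothetical, and the argument list only uses the flags shown in this step.

```python
import re
from typing import List

# Assumption: 16 colon-separated hex pairs, as in the sample fingerprint.
FINGERPRINT_RE = re.compile(r"[0-9a-f]{2}(?::[0-9a-f]{2}){15}")


def is_valid_fingerprint(fingerprint: str) -> bool:
    """Check the fingerprint shape without contacting OCI or the ZFSSA."""
    return FINGERPRINT_RE.fullmatch(fingerprint.strip().lower()) is not None


def alter_cloud_key_args(key_name: str, fingerprint: str) -> List[str]:
    """Build the argument list for the alter cloud_key step shown above."""
    if not is_valid_fingerprint(fingerprint):
        raise ValueError(f"unexpected fingerprint format: {fingerprint!r}")
    return [
        "racli", "alter", "cloud_key",
        f"--key_name={key_name}",
        f"--fingerprint={fingerprint.strip().lower()}",
    ]
```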

Using your own API signing key pair

#1 Add cloud_key

racli add cloud_key --key_name=KEY_NAME [--fingerprint=PUBFINGERPRINT --pvt_key_path=PVTKEYFILE]

You can add your own API signing keys to the ZDLRA by using the "add cloud_key" command identifying both the private key file location (it is best to follow the format and location in the automated steps) and the fingerprint associated with the API signing keys.

It is assumed that the public key has already been added to OCI, or to the ZFSSA.

Status - racli list cloud_key

Delete - racli remove cloud_key

Update - racli alter cloud_key

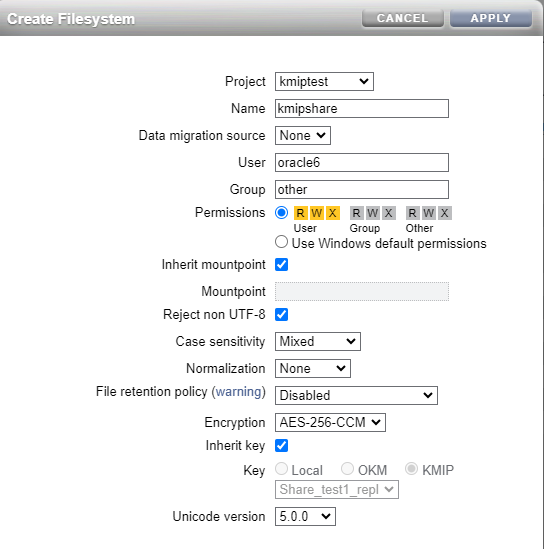

Documentation steps to create a new cloud_user

This step is used to create the wallet entry on the ZDLRA that is used for authenticating to the object store.

This step combines the "cloud_key" (which contains the API signing keys), the user login information, and the compartment (on ZFSSA the compartment is the share).

The cloud_user can be used for authentication with multiple buckets/locations that are identified as cloud_locations as long as they are within the same compartment (share on ZFSSA).

The format of the command to create a new cloud_user is below

racli add cloud_user

--user_name=<CLOUD_USER_NAME>

--key_name=<KEY_NAME>

--user_ocid=ocid1.user.oc1..abcedfghijklmnopqrstuvwxyz0124567901

--tenancy_ocid=ocid1.tenancy.oc1..abcedfghijklmnopqrstuvwxyz0124567902

--compartment_ocid=ocid1.compartment.oc1..abcedfghijklmnopqrstuvwxyz0124567903

The parameters for this command are

- user_name - This is the username that is associated with the cloud_user to uniquely identify it.

- key_name - This is the name of the "cloud_key" identifying the API signing keys to be used.

- user_ocid - This is the username for authentication. In OCI this is the user's OCID; on ZFSSA, this combines the OCID format with the username on the ZFSSA that owns the share.

- tenancy_ocid - This is the tenancy OCID in OCI. On ZFSSA it is ignored.

- compartment_ocid - This is the compartment OCID in OCI. On ZFSSA it is the share.
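
To show how the five parameters fit together, here is a hedged Python sketch that groups them into one record and renders an argument list. It assumes the command is `racli add cloud_user` (by analogy with the list, remove and alter commands) and that user_name and key_name are passed as `--user_name=` and `--key_name=`; the `CloudUser` class and the value `sample_user` are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CloudUser:
    user_name: str         # unique name for this cloud_user on the ZDLRA
    key_name: str          # name of the cloud_key holding the API signing keys
    user_ocid: str         # OCI user OCID (or the ZFSSA user in OCID format)
    tenancy_ocid: str      # OCI tenancy OCID (ignored on ZFSSA)
    compartment_ocid: str  # OCI compartment OCID (the share on ZFSSA)

    def to_args(self) -> List[str]:
        """Render the parameters as --name=value arguments."""
        # Assumption: the verb/object pair is "add cloud_user".
        return [
            "racli", "add", "cloud_user",
            f"--user_name={self.user_name}",
            f"--key_name={self.key_name}",
            f"--user_ocid={self.user_ocid}",
            f"--tenancy_ocid={self.tenancy_ocid}",
            f"--compartment_ocid={self.compartment_ocid}",
        ]


example = CloudUser(
    user_name="sample_user",
    key_name="sample_key",
    user_ocid="ocid1.user.oc1..abcedfghijklmnopqrstuvwxyz0124567901",
    tenancy_ocid="ocid1.tenancy.oc1..abcedfghijklmnopqrstuvwxyz0124567902",
    compartment_ocid="ocid1.compartment.oc1..abcedfghijklmnopqrstuvwxyz0124567903",
)
```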

For more information on configuring the ZFSSA see

How to configure Zero Data Loss Recovery Appliance to use ZFS OCI Object Storage as a cloud repository (Doc ID 2761114.1)

List - racli list cloud_user

Delete - racli remove cloud_user

Update - racli alter cloud_user

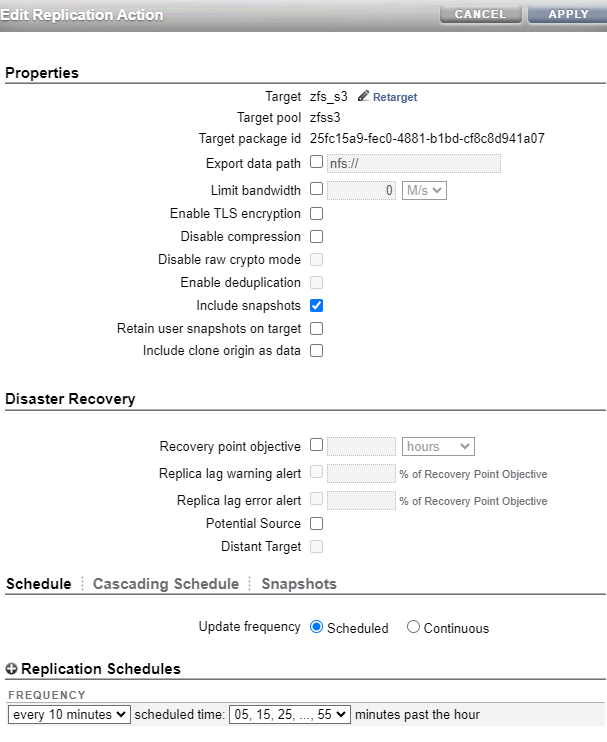

Documentation steps to create a new cloud_location

This step is used to associate the cloud_user (used for authentication) with both the location and the bucket that is going to be used for backups.

racli add cloud_location

--cloud_user=<CLOUD_USER_NAME>

--host=https://<OPC_STORAGE_LOCATION>

--bucket=<OCI_BUCKET_NAME>

--proxy_port=<HOST_PORT>

--proxy_host=<PROXY_URL>

--proxy_id=<PROXY_ID>

--proxy_pass=<PROXY_PASS>

--streams=<NUM_STREAMS>

[--enable_archive=TRUE]

--archive_after_backup=<number>:[YEARS | DAYS]

[--retain_after_restore=<number_hours>:HOURS]

--import_all_trustcert=<X509_CERT_PATH>

--immutable

--temp_metadata_bucket=<metadata_bucket>

I am going to go through the key items that need to be entered here. I am going to skip over the PROXY information and certificate.

- cloud_user - This is the object store authentication information that was created in the previous steps.

- host - This is the URL for the object storage location. On ZFS the namespace in the URL is the "share".

- bucket - This is the bucket where the backups will be sent. The bucket will be created if it doesn't exist.

- streams - The maximum number of channels to use when sending backups to the cloud.

- enable_archive - Not used with ZFS. With OCI, the default of TRUE allows you to set an archival strategy; FALSE will automatically put backups in archival storage.

- archive_after_backup - Not used with ZFS. Automatically configures an archival strategy in OCI.

- retain_after_restore - Not used with ZFS. Sets the period of time that backups will remain in standard storage before returning to archival storage.

- immutable - This allows you to set retention rules on the bucket by using the <metadata_bucket> for temporary files that need to be deleted after the backup. When using immutable you must also have a temp_metadata_bucket.

- temp_metadata_bucket - This is used with immutable to configure backups to go to 2 buckets, and this bucket will only contain a temporary object that gets deleted after the backup completes.
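
A few of these options depend on each other or have a fixed value format, so a pre-flight check can catch mistakes before the command is run. The Python sketch below only encodes rules stated in this post (immutable needs temp_metadata_bucket, and the `<number>:YEARS|DAYS` and `<number>:HOURS` formats); the positive-integer check on streams is my own assumption, and the function name is hypothetical.

```python
import re
from typing import Dict, List

ARCHIVE_AFTER_RE = re.compile(r"\d+:(YEARS|DAYS)")
RETAIN_AFTER_RE = re.compile(r"\d+:HOURS")


def check_cloud_location_options(opts: Dict[str, object]) -> List[str]:
    """Return a list of problems found in the option values (empty if none)."""
    problems: List[str] = []
    if opts.get("immutable") and not opts.get("temp_metadata_bucket"):
        problems.append("immutable requires temp_metadata_bucket")
    archive = opts.get("archive_after_backup")
    if archive is not None and not ARCHIVE_AFTER_RE.fullmatch(str(archive)):
        problems.append("archive_after_backup must be <number>:YEARS or <number>:DAYS")
    retain = opts.get("retain_after_restore")
    if retain is not None and not RETAIN_AFTER_RE.fullmatch(str(retain)):
        problems.append("retain_after_restore must be <number>:HOURS")
    streams = opts.get("streams")
    # Assumption: streams is a whole number of channels, at least 1.
    if streams is not None and (not isinstance(streams, int) or streams < 1):
        problems.append("streams must be a positive integer")
    return problems
```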

This command will create multiple attribute sets (between 1 and the number of streams) for the cloud_location that can be used for sending archival backups to the cloud with different numbers of channels.

The format of <copy_cloud_name> is a combination of <bucket name> and <cloud_user>.

The format of the attributes used for the copy jobs is <Cloud_location_name>_<stream number>
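
The naming rule for the attribute sets can be sketched in a few lines of Python. The post does not spell out how the bucket name and cloud_user are joined, so this hypothetical helper takes the cloud location name as given and only applies the `<Cloud_location_name>_<stream number>` rule; the example name is made up.

```python
from typing import List


def attribute_set_names(cloud_location_name: str, streams: int) -> List[str]:
    """List the attribute set names for streams 1 through `streams`."""
    if streams < 1:
        raise ValueError("streams must be at least 1")
    return [f"{cloud_location_name}_{number}" for number in range(1, streams + 1)]


# Hypothetical cloud location name, for illustration only.
names = attribute_set_names("mybucket_myuser", 3)
```

Here `names` holds one entry per stream count, so a copy job can pick the attribute set that matches the number of channels it should use.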

Update - racli alter cloud_location

Disable - racli disable cloud_location - This will pause all backups going to this location

Enable - racli enable cloud_location - This unpauses all backups going to this location

List - racli list cloud_location

Delete - racli remove cloud_location

NOTE: There are quite a few items to note in this section.

- When configuring backups to go to ZFSSA use the documentation previously mentioned to ensure the parameters are correct.

- When executing this step with ZFSSA, make sure that the default OCI location on the ZFSSA is set to the share that you are currently configuring. If you are using multiple shares for buckets, then you will have to change the ZFSSA settings as you add cloud locations.

- When using OCI for archival, ensure that you configure the archival rules using this command. This ensures that the metadata objects, which can't be archived, are excluded as part of the lifecycle management rules created during this step.

Create the job template using the documentation.