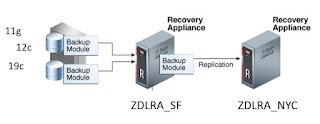

One of the topics that comes up when implementing ZDLRA is the new RMAN feature that allows multiple users to share an RMAN catalog but still be isolated from each other.

This is NOT a ZDLRA-specific feature, but I find that most customers have never used it until they move to a shared BaaS (Backup as a Service) environment like ZDLRA.

Here is the basic explanation. I am a DBA in the ACME corporation. The ACME corporation has 2 divisions: the white division and the black division.

I work for the black division of ACME.

Since the ACME corporation has a shared infrastructure, there is a single ZDLRA and thus a single RMAN catalog for all database backups.

As you can guess, my division (black) wants to be isolated from the white division. We don't want the white division to be able to access the backups for any database that my division supports. The white division feels the same way about my division.

Now this is where the idea of VPC users in the RMAN catalog comes in. First, here is some documentation on it to do your own reading.

For my example I have 2 databases.

- OEMDB (White division maintained)

- RMAN19C (Black division maintained)

The ZDLRA already has a catalog created, and in order to manage VPC users, you need to use the Command Line Interface (racli).

A note on naming: on the ZDLRA the users are referred to as VPC (Virtual Private Catalog) users. The documentation for this feature refers to it as a VPD (Virtual Private Database) model.

In order to demonstrate how this works, I am going to walk through the steps with just RMAN.

Step #1 - Create an RMAN user in a database to own the RMAN catalog.

SQL> create user rman identified by oracle

temporary tablespace temp

default tablespace rman_data

quota unlimited on rman_data;

User created.

SQL> grant recovery_catalog_owner to rman;

Grant succeeded.

Step #2 - Create the catalog for the RMAN user.

[oracle@oracle-server admin]$ rman target / catalog rman/oracle

Recovery Manager: Release 19.0.0.0.0 - Production on Wed May 13 08:10:23 2020

Version 19.6.0.0.0

Copyright (c) 1982, 2019, Oracle and/or its affiliates. All rights reserved.

connected to target database: OEMDB (DBID=700723428)

connected to recovery catalog database

RMAN> create catalog tablespace rman_data;

recovery catalog created

RMAN>

Step #3 - Enable the VPD model within the recovery catalog. The VPD model is not available by default.

SQL> @/$ORACLE_HOME/rdbms/admin/dbmsrmanvpc.sql -vpd rman

Checking the operating user... Passed

Granting VPD privileges to the owner of the base catalog schema RMAN

========================================

VPD SETUP STATUS:

VPD privileges granted successfully!

Connect to RMAN base catalog and perform UPGRADE CATALOG.

Disconnected from Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.6.0.0.0

Step #4 - Upgrade the RMAN catalog.

I didn't notice this at first, but the last message at the end of the script indicates that you need to execute UPGRADE CATALOG.

-vpd command grants required privileges to support VPD protected catalog.

Connect to RMAN base catalog and perform UPGRADE CATALOG after the VPD

privileges are granted.

After UPGRADE CATALOG is performed for the base catalog schemas a cleanup

of VPC schemas has to take place for that the RMAN base catalog schema

names have to be supplied as command line parameters. Up to 10 schema

names can be supplied per script execution. When -all is specified the

script attempts to detect the RMAN base catalog schemas automatically

and perform the upgrade.

Now to upgrade the catalog.

[oracle@oracle-server admin]$ rman catalog rman/oracle

Recovery Manager: Release 19.0.0.0.0 - Production on Wed May 13 08:22:18 2020

Version 19.6.0.0.0

Copyright (c) 1982, 2019, Oracle and/or its affiliates. All rights reserved.

connected to recovery catalog database

RMAN> upgrade catalog;

recovery catalog owner is RMAN

enter UPGRADE CATALOG command again to confirm catalog upgrade

RMAN> upgrade catalog;

recovery catalog upgraded to version 19.06.00.00.00

DBMS_RCVMAN package upgraded to version 19.06.00.00

DBMS_RCVCAT package upgraded to version 19.06.00.00.

Step #5 - Create my 2 VPC users within my RMAN catalog, which is now VPD enabled.

SQL> create user black identified by oracle

temporary tablespace temp;

User created.

SQL> grant create session to black;

Grant succeeded.

SQL> create user white identified by oracle

temporary tablespace temp;

User created.

SQL> grant create session to white;

Grant succeeded.

Now I have 2 choices for registering my 2 databases.

- I can reserve the "REGISTER DATABASE" for the catalog owner (RMAN) only

- I can grant VPC users the authority to register their own databases
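For comparison, the first choice could look something like the sketch below. This is not what I ran in this walkthrough. It assumes the catalog owner (rman) connects to the target itself, registers the database, and then uses GRANT CATALOG FOR DATABASE to give a VPC user access to that one database. The command file name owner_register.rman is hypothetical, and the script only calls rman if it is found on the PATH.

```shell
# Hypothetical command file name (an assumption, not from the walkthrough).
cmdfile=owner_register.rman

# The catalog owner registers the database, then grants the "white"
# VPC user access to the metadata of that database only.
cat > "$cmdfile" <<'EOF'
register database;
grant catalog for database OEMDB to white;
EOF

# Assumes the environment points at the OEMDB instance and that
# rman/oracle is the catalog owner created in the earlier steps.
# The call is skipped when rman is not installed.
if command -v rman >/dev/null 2>&1; then
  rman target / catalog rman/oracle cmdfile="$cmdfile"
fi
```

With this approach the VPC users never need the REGISTER DATABASE privilege. The trade-off is that the catalog owner has to be involved every time a new database is added.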

Step #5 (continued) - I have decided to grant the VPC users the ability to register databases themselves.

[oracle@oracle-server ~]$ rman catalog rman/oracle

Recovery Manager: Release 19.0.0.0.0 - Production on Wed May 13 08:25:29 2020

Version 19.6.0.0.0

Copyright (c) 1982, 2019, Oracle and/or its affiliates. All rights reserved.

connected to recovery catalog database

RMAN> grant register database to black;

Grant succeeded.

RMAN> grant register database to white;

Grant succeeded.

NOTE: When I first ran the commands I received the error below.

RMAN-07543: recovery catalog does not have VPD support enabled

If you see this error, it is most likely because you didn't execute "UPGRADE CATALOG" after enabling the VPD model.
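If you do run into RMAN-07543, the recovery is simply to repeat the upgrade and then retry the grants. Here is a minimal sketch of doing that in one pass from a command file, assuming the same catalog owner (rman/oracle) and VPC user names used in this post. The file name enable_vpc_grants.rman is hypothetical, and UPGRADE CATALOG appears twice because RMAN asks for it a second time to confirm.

```shell
# Hypothetical command file name (an assumption, not from the walkthrough).
cmdfile=enable_vpc_grants.rman

# UPGRADE CATALOG must be entered twice: the second entry is the confirmation.
cat > "$cmdfile" <<'EOF'
upgrade catalog;
upgrade catalog;
grant register database to black;
grant register database to white;
EOF

# Connect to the catalog only (no target needed for these commands).
# The call is skipped when rman is not installed.
if command -v rman >/dev/null 2>&1; then
  rman catalog rman/oracle cmdfile="$cmdfile"
fi
```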

Step #6 - Now let's register the databases with separate VPC users and back up a datafile.

I am going to register database OEMDB as the "white" VPC user and back up datafile 1.

[oracle@oracle-server ~]$ rman target / catalog white/oracle@zdlra_sf

Recovery Manager: Release 19.0.0.0.0 - Production on Wed May 13 08:39:57 2020

Version 19.6.0.0.0

Copyright (c) 1982, 2019, Oracle and/or its affiliates. All rights reserved.

connected to target database: OEMDB (DBID=700723428)

connected to recovery catalog database

RMAN> register database;

database registered in recovery catalog

starting full resync of recovery catalog

full resync complete

RMAN> backup incremental level 0 datafile 1;

Starting backup at 05/13/20 08:40:29

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=398 device type=DISK

channel ORA_DISK_1: starting incremental level 0 datafile backup set

channel ORA_DISK_1: specifying datafile(s) in backup set

input datafile file number=00001 name=/home/oracle/app/oracle/oradata/OEMDB/datafile/o1_mf_system_h4llc7kt_.dbf

channel ORA_DISK_1: starting piece 1 at 05/13/20 08:40:30

channel ORA_DISK_1: finished piece 1 at 05/13/20 08:41:15

piece handle=/home/oracle/app/oracle/fast_recovery_area/OEMDB/backupset/2020_05_13/o1_mf_nnnd0_TAG20200513T084030_hcqtoz3z_.bkp tag=TAG20200513T084030 comment=NONE

channel ORA_DISK_1: backup set complete, elapsed time: 00:00:45

Finished backup at 05/13/20 08:41:15

Starting Control File and SPFILE Autobackup at 05/13/20 08:41:15

piece handle=/home/oracle/app/oracle/fast_recovery_area/OEMDB/autobackup/2020_05_13/o1_mf_s_1040287283_hcqtqnbm_.bkp comment=NONE

Finished Control File and SPFILE Autobackup at 05/13/20 08:41:26

I am going to register database RMAN19C as the "black" VPC user and back up datafile 1.

[oracle@oracle-server ~]$ rman target / catalog black/oracle@zdlra_sf

Recovery Manager: Release 19.0.0.0.0 - Production on Wed May 13 08:42:49 2020

Version 19.6.0.0.0

Copyright (c) 1982, 2019, Oracle and/or its affiliates. All rights reserved.

connected to target database: RMAN19C (DBID=4290218304)

connected to recovery catalog database

RMAN> register database;

database registered in recovery catalog

starting full resync of recovery catalog

full resync complete

RMAN> backup incremental level 0 datafile 1;

Starting backup at 05/13/20 08:43:12

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=274 device type=DISK

channel ORA_DISK_1: starting incremental level 0 datafile backup set

channel ORA_DISK_1: specifying datafile(s) in backup set

input datafile file number=00001 name=/home/oracle/app/oracle/oradata/RMAN19C/datafile/system.dbf

channel ORA_DISK_1: starting piece 1 at 05/13/20 08:43:13

channel ORA_DISK_1: finished piece 1 at 05/13/20 08:44:08

piece handle=/home/oracle/app/oracle/fast_recovery_area/RMAN19C/backupset/2020_05_13/o1_mf_nnnd0_TAG20200513T084313_hcqtv1fc_.bkp tag=TAG20200513T084313 comment=NONE

channel ORA_DISK_1: backup set complete, elapsed time: 00:00:55

Finished backup at 05/13/20 08:44:08

Starting Control File and SPFILE Autobackup at 05/13/20 08:44:08

piece handle=/home/oracle/app/oracle/fast_recovery_area/RMAN19C/autobackup/2020_05_13/o1_mf_s_1040287449_hcqtwtmj_.bkp comment=NONE

Finished Control File and SPFILE Autobackup at 05/13/20 08:44:12

Step #7 - Now let's see what happens when I try to cross VPC users within the catalog.

NOTE: Below are the 2 databases and their DBIDs. As each VPC user, I will try to access the other user's database by setting its DBID, which is the first step of restoring it through RMAN.

white VPC user ----> OEMDB (DBID=700723428)

black VPC user ----> RMAN19C (DBID=4290218304)

First let's connect as "white" to a new instance and try to set the DBID for each of the databases. You can see that "white" can only see the metadata for OEMDB.

[oracle@oracle-server admin]$ rman target / catalog white/oracle@zdlra_sf

Recovery Manager: Release 19.0.0.0.0 - Production on Wed May 13 08:50:39 2020

Version 19.6.0.0.0

Copyright (c) 1982, 2019, Oracle and/or its affiliates. All rights reserved.

connected to target database (not started)

connected to recovery catalog database

RMAN> set dbid=4290218304;

executing command: SET DBID

RMAN-00571: ===========================================================

RMAN-00569: =============== ERROR MESSAGE STACK FOLLOWS ===============

RMAN-00571: ===========================================================

RMAN-03002: failure of set command at 05/13/2020 08:51:15

RMAN-06063: DBID is not found in the recovery catalog

RMAN> set dbid=700723428;

executing command: SET DBID

database name is "OEMDB" and DBID is 700723428

RMAN>

Now let's connect as "black" to a new instance and try to set the DBID for each of the databases. You can see that "black" can only see the metadata for RMAN19C.

[oracle@oracle-server admin]$ rman target / catalog black/oracle@zdlra_sf

Recovery Manager: Release 19.0.0.0.0 - Production on Wed May 13 08:54:20 2020

Version 19.6.0.0.0

Copyright (c) 1982, 2019, Oracle and/or its affiliates. All rights reserved.

connected to target database (not started)

connected to recovery catalog database

RMAN> set dbid=4290218304;

executing command: SET DBID

database name is "RMAN19C" and DBID is 4290218304

RMAN> set dbid=700723428;

executing command: SET DBID

RMAN-00571: ===========================================================

RMAN-00569: =============== ERROR MESSAGE STACK FOLLOWS ===============

RMAN-00571: ===========================================================

RMAN-03002: failure of set command at 05/13/2020 08:55:01

RMAN-06063: DBID is not found in the recovery catalog
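To confirm that both databases really do live in the same base catalog, one option is to query the RC_DATABASE catalog view as the catalog owner, who owns the base catalog and should see every registered database. A minimal sketch, assuming SQL*Plus is available and the owner is still rman/oracle; the file name list_catalog_dbs.sql is hypothetical.

```shell
# Hypothetical script file name (an assumption, not from the walkthrough).
sqlfile=list_catalog_dbs.sql

# RC_DATABASE is the catalog view listing registered databases.
cat > "$sqlfile" <<'EOF'
select dbid, name from rc_database order by name;
exit
EOF

# Run as the catalog owner. The call is skipped when sqlplus is not installed.
if command -v sqlplus >/dev/null 2>&1; then
  sqlplus -s rman/oracle @"$sqlfile"
fi
```

I would expect both OEMDB and RMAN19C to show up for the owner, in contrast to what each VPC user was able to see above.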

CONCLUSION: Implementing the VPD model in an RMAN catalog allows you to share a single RMAN catalog while still keeping each group's backup metadata isolated within the same catalog.

This feature is leveraged by the ZDLRA to allow a single ZDLRA to be shared across a corporation, but still allow isolation by internal organizations.

Final Note: As Multi-tenant becomes the standard, support for isolation will start to be at the PDB level also. This will allow for different VPC users to manage PDBs within the same CDB. This is dependent on the version of RMAN/DB/ZDLRA.