One new thing I learned about RMAN is the ability to use shared scripts (Oracle calls them stored scripts). Shared scripts can be very useful as you standardize your environment.

This is where they fit in with the ZDLRA. The ZDLRA is an awesome product that helps you standardize your Oracle backups, so adding shared scripts to your environment makes it even better!

First, a little background on shared scripts, in case you are new to them (I was).

Shared scripts are exactly what you would expect from the name: a way to store a script in your RMAN catalog so that it can be shared by the databases that use that RMAN catalog.

The commands used to work with shared scripts are:

- [CREATE]/[CREATE OR REPLACE] {GLOBAL} SCRIPT

- DELETE {GLOBAL} SCRIPT

- EXECUTE {GLOBAL} SCRIPT

- PRINT {GLOBAL} SCRIPT

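The {GLOBAL} keyword is optional, but it changes who can use the script. A script created without it is a local script: it is associated with the target database RMAN is connected to when the script is created, and it can only be executed while you are connected to that database. A GLOBAL script can be executed against any database registered in the catalog, so GLOBAL is what you want when every database should share one script.

Stored scripts also interact with VPD users in the RMAN catalog. I will go through VPD users in a future post, as that is a topic of its own.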
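Here is a short sketch of what those commands look like at the RMAN prompt, assuming you are connected to a target database and to the catalog as its owner. The script names `arch_local` and `arch_global` and the archived-log backup inside them are hypothetical, chosen only for illustration. Note that EXECUTE SCRIPT is only valid inside a RUN block.

```
# Local script: stored for the target database RMAN is connected to right now
create script arch_local
comment "Hypothetical example: back up archived logs not yet backed up"
{
  backup device type sbt archivelog all not backed up;
}

# Global script: can be executed for any database registered in this catalog
create global script arch_global
comment "Hypothetical example: same commands, shared by every database"
{
  backup device type sbt archivelog all not backed up;
}

# Display the stored text of each script
print script arch_local;
print global script arch_global;

# EXECUTE SCRIPT has to be wrapped in a RUN block
run { execute script arch_local; }
run { execute global script arch_global; }

# Remove both scripts from the catalog
delete script arch_local;
delete global script arch_global;
```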

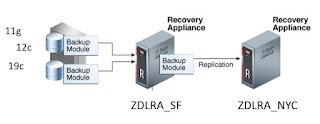

Now let's walk through an example of how to make the best use of stored scripts. In my example, I have 2 ZDLRAs (let's pretend). With ZDLRA, the RMAN catalog is contained within the ZDLRA itself, so because I have 2 ZDLRAs, I have 2 RMAN catalogs. I will show how a shared script can automatically switch backups to a different ZDLRA when the primary ZDLRA is not available, for example during a patching window. Below is a picture of my fictitious environment.

In this case my backups go to ZDLRA_SF, and they are replicated to ZDLRA_NYC. My database is registered in both RMAN catalogs, but I only connect to the catalog on ZDLRA_SF if it is available.

Now let's create a script in each catalog that is specific to that ZDLRA.

Step #1 - add a script to both ZDLRAs that creates the channel configuration.

This is the script for the SF ZDLRA.

```
create or replace script ZDLRA_INCREMENTAL_BACKUP_L1
COMMENT "Normal level 1 incremental backups for San Francisco Primary ZDLRA"
{
###
### Script to backup incremental level 1 to Primary San Francisco ZDLRA
###
###
### First configure the channel for San Francisco ZDLRA
###
CONFIGURE CHANNEL DEVICE TYPE 'SBT_TAPE' FORMAT '%d_%U' PARMS
"SBT_LIBRARY=/u01/app/oracle/lib/libra.so,
ENV=(RA_WALLET='location=file:/u01/app/oracle/wallet
credential_alias=zdlrasf-scan:1521/zdlra:dedicated')";
###
### Perform backup command
###
backup device type sbt cumulative incremental level 1 filesperset 1 section size 64g database plus archivelog filesperset 32 not backed up;
}

created script ZDLRA_INCREMENTAL_BACKUP_L1
```

This is the script for the NYC ZDLRA.

```
create or replace script ZDLRA_INCREMENTAL_BACKUP_L1
COMMENT "Normal level 1 incremental backups for New York Primary ZDLRA"
{
###
### Script to backup incremental level 1 to Primary New York ZDLRA
###
###
### First configure the channel for New York ZDLRA
###
CONFIGURE CHANNEL DEVICE TYPE 'SBT_TAPE' FORMAT '%d_%U' PARMS
"SBT_LIBRARY=/u01/app/oracle/lib/libra.so,
ENV=(RA_WALLET='location=file:/u01/app/oracle/wallet
credential_alias=zdlranyc-scan:1521/zdlra:dedicated')";
###
### Perform backup command
###
backup device type sbt cumulative incremental level 1 filesperset 1 section size 64g database plus archivelog filesperset 32 not backed up;
}

created script ZDLRA_INCREMENTAL_BACKUP_L1
```
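Because the two versions share one name but live in different catalogs, each one has to be created while connected directly to that ZDLRA's catalog, not through a failover alias. Leaving out GLOBAL, as these scripts do, also ties each copy to the target database you are connected to. One way to keep the script text in version control is to save each body to a file and load it with FROM FILE. The sketch below assumes a hypothetical TNS alias `ZDLRA_SF_ONLY` that points only at the San Francisco catalog and a hypothetical file `/tmp/zdlra_l1_sf.rman` holding the script body; the same steps would be repeated against the New York catalog with its own file.

```
# RMAN session connected to the target and directly to the SF catalog
# (ZDLRA_SF_ONLY is a hypothetical TNS alias that does not fail over)
connect target /
connect catalog rman/oracle@ZDLRA_SF_ONLY

# The file holds only the script body: first line "{", last line "}"
# REPLACE SCRIPT creates the script if it does not exist yet
replace script ZDLRA_INCREMENTAL_BACKUP_L1
comment "Normal level 1 incremental backups for San Francisco Primary ZDLRA"
from file '/tmp/zdlra_l1_sf.rman';

# Confirm what was stored in this catalog
print script ZDLRA_INCREMENTAL_BACKUP_L1;
```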

Now I have a script in each ZDLRA that configures the channel correctly for that ZDLRA.
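The test below only prints the script, so here is a sketch of how a backup job would actually run it once connected to whichever catalog answered. One thing worth testing before relying on this: a stored script is executed inside a RUN block, and CONFIGURE is normally issued at the RMAN prompt rather than inside RUN. If your release rejects the CONFIGURE line when the script executes, a variant that allocates the channel for the duration of the run is a common alternative. The channel name `zdlra1` is hypothetical, and with a manually allocated channel the BACKUP command drops its DEVICE TYPE clause.

```
# Run the stored script from the connected catalog;
# EXECUTE SCRIPT has to be wrapped in a RUN block
run { execute script ZDLRA_INCREMENTAL_BACKUP_L1; }

# Possible alternative body for the SF copy, allocating the channel for this run only
# (the channel name zdlra1 is hypothetical)
replace script ZDLRA_INCREMENTAL_BACKUP_L1
comment "Normal level 1 incremental backups for San Francisco Primary ZDLRA"
{
allocate channel zdlra1 device type 'SBT_TAPE' format '%d_%U' parms
"SBT_LIBRARY=/u01/app/oracle/lib/libra.so,
ENV=(RA_WALLET='location=file:/u01/app/oracle/wallet
credential_alias=zdlrasf-scan:1521/zdlra:dedicated')";
# With a manually allocated channel, BACKUP takes no DEVICE TYPE clause
backup cumulative incremental level 1 filesperset 1 section size 64g database plus archivelog filesperset 32 not backed up;
}
```

The NYC copy would be identical apart from the `credential_alias`, which is what lets the same `execute script` line land on whichever ZDLRA the connection reached.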

Step #2 - Ensure the connection to the RMAN catalog fails over if the primary ZDLRA is not available.

The best way to accomplish this is with GDS (Global Data Services). For my test, I am going to set up failover in my tnsnames.ora file instead. Both of my pretend ZDLRAs are databases listening on localhost port 1522, so the two entries differ only by service name. Below is the entry in my file.

```
ZDLRA_SF=
  (DESCRIPTION_LIST=(FAILOVER=YES)(LOAD_BALANCE= NO)
    (DESCRIPTION=(FAILOVER= NO)
      (CONNECT_DATA=(SERVICE_NAME=ZDLRA_SF))
      (ADDRESS=(PROTOCOL=TCP)(HOST=localhost)(PORT=1522))
    )
    (DESCRIPTION=(FAILOVER= NO)
      (CONNECT_DATA=(SERVICE_NAME=ZDLRA_NYC))
      (ADDRESS=(PROTOCOL=TCP)(HOST=localhost)(PORT=1522))
    )
  )
```
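In a real two-ZDLRA environment the two DESCRIPTIONs would point at different hosts. Here is a sketch using the SCAN names, port, and service taken from the wallet aliases in the scripts (`zdlrasf-scan`, `zdlranyc-scan`, port 1521, service `zdlra`); the alias name `ZDLRA_CATALOG` is hypothetical, and your hosts, ports, and service names may differ. With FAILOVER=YES and LOAD_BALANCE=NO on the DESCRIPTION_LIST, Oracle Net tries the descriptions in the order listed, so New York is only used when San Francisco cannot be reached.

```
ZDLRA_CATALOG=
  (DESCRIPTION_LIST=(FAILOVER=YES)(LOAD_BALANCE=NO)
    (DESCRIPTION=(FAILOVER=NO)
      (ADDRESS=(PROTOCOL=TCP)(HOST=zdlrasf-scan)(PORT=1521))
      (CONNECT_DATA=(SERVICE_NAME=zdlra))
    )
    (DESCRIPTION=(FAILOVER=NO)
      (ADDRESS=(PROTOCOL=TCP)(HOST=zdlranyc-scan)(PORT=1521))
      (CONNECT_DATA=(SERVICE_NAME=zdlra))
    )
  )
```

The backup job would then connect with `@ZDLRA_CATALOG` in its catalog connect string in place of `@zdlra_sf`.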

Step #3 - Let's put it all together.

Since I don't have 2 ZDLRAs to test this with, I will test it by printing out the script that would be executed in RMAN.

First I am going to show what happens under normal conditions. I am going to execute the following script, which I created in /tmp/rman.sql:

```
print script ZDLRA_INCREMENTAL_BACKUP_L1;
exit;
```

I am going to run that script over and over to make sure I keep connecting properly. Below is the command:

```
rman target / catalog rman/oracle@zdlra_sf @/tmp/rman.sql
```

Here is the output I get every time, showing that I am connected to the ZDLRA_SF catalog and will configure the channel for ZDLRA_SF:

```
RMAN> print script ZDLRA_INCREMENTAL_BACKUP_L1;
2> exit;
printing stored script: ZDLRA_INCREMENTAL_BACKUP_L1
{
###
### Script to backup incremental level 1 to Primary San Francisco ZDLRA
###
###
### First configure the channel for San Francisco ZDLRA
###
CONFIGURE CHANNEL DEVICE TYPE 'SBT_TAPE' FORMAT '%d_%U' PARMS
"SBT_LIBRARY=/u01/app/oracle/lib/libra.so,
ENV=(RA_WALLET='location=file:/u01/app/oracle/wallet
credential_alias=zdlrasf-scan:1521/zdlra:dedicated')";
###
### Perform backup command
###
backup device type sbt cumulative incremental level 1 filesperset 1 section size 64g database plus archivelog filesperset 32 not backed up;
}
```

Everything is working fine. Now let's simulate the primary being unavailable.

I shut down the ZDLRA_SF database.

Now I execute the same command as before:

```
rman target / catalog rman/oracle@zdlra_sf @/tmp/rman.sql
```

My output now shows that it is using my New York ZDLRA.

```
RMAN> print script ZDLRA_INCREMENTAL_BACKUP_L1;
2> exit;
printing stored script: ZDLRA_INCREMENTAL_BACKUP_L1
{
###
### Script to backup incremental level 1 to Primary New York ZDLRA
###
###
### First configure the channel for New York ZDLRA
###
CONFIGURE CHANNEL DEVICE TYPE 'SBT_TAPE' FORMAT '%d_%U' PARMS
"SBT_LIBRARY=/u01/app/oracle/lib/libra.so,
ENV=(RA_WALLET='location=file:/u01/app/oracle/wallet
credential_alias=zdlranyc-scan:1521/zdlra:dedicated')";
###
### Perform backup command
###
backup device type sbt cumulative incremental level 1 filesperset 1 section size 64g database plus archivelog filesperset 32 not backed up;
}

Recovery Manager complete.
```