Here is a link to the latest version of it.

As you probably know, MAA is described as "best practices" for implementing the Oracle Database.

What I am going to explain in this blog post, is why you need the different pieces.

Questions often comes up around why each piece of availability (for lack of a better word) is important.

These pieces include

- Data Guard

- Active Data Guard

- Flashback database

- Point-in-time backups

- Archive Backups

- DR site

Data Guard

Well let's start with the first piece Data Guard. I'm not going address Data Guard in the same datacenter, just Data Guard between datacenters.

There is a difference between "just Data Guard" and "Active Data Guard"

Data Guard - Mounted copy of the primary database that is constantly applying redo changes. It can be thought of as always in recovery. This copy can be gracefully "switched" to without data loss (all transactions are applied before opening). In the event of an emergency, it can be "failed" to which allows for opening it without applying all transactions.

Active Data Guard - A Data Guard copy of the data (like above), BUT this is a read-only copy of the primary database. Note this is an extra cost option requiring a license. Having the database open read-only has many advantages.

- Reporting, and read-only workload can be sent to the Data Guard copy of the database taking advantage of the resources available that would typically sit idle.

- Automatic block repair from the primary database.

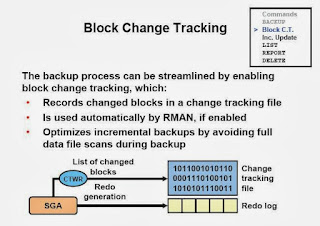

- A Block Change Tracking can be used to speed up incremental backups.

Data Guard, regardless of the 2 types is often between datacenters with limited bandwidth.

This becomes important for a few reasons

- Cloning across datacenters, or even rebuilding a copy of the database in the opposite datacenter can take a long time. Even days for a very large database.

- The application tier that accesses the database needs to move with the database. In the event of a switchover/failover of the database, the application must also switchover.

NOTE : Making your Data Guard database your primary database involves moving the application tier to your DR data center. The testing and changes to move the application may be risky and involve more time than simply restoring the database.

Flashback Database

Flashback database allows you to query the database as of a previous point-in-time, and even flashback the entire database to a previous point-in-time.

You can also use flashback database to "recover" a table that was accidentally dropped.

You can also use flashback database to "recover" a table that was accidentally dropped.

With flashback database, the changes are kept in the FRA for a period of time, typically only a few hours or less depending on workload.

Flashback can be helpful in a few situations.

- Logical corruption of a subset of data. If a logical corruption occurs, like data is accidentally deleted, or incorrectly updated, you can view the data as it appeared before the corruption occurred. This of course, is assuming that the corruption is found within the flashback window. To correct corruption, the pre-corrupt data can be selected from the database and saved to a file. The application team familiar with the data, can then create scripts to surgically correct the data.

- Logical corruption of much of data. If much of the data is corrupted you can flashback the database to a point-in-time prior to the corruption within the flashback window. This a FULL flashback of ALL data. After the flashback occurs, a resetlogs is performed creating a new incarnation of the database. This can be useful if an application release causes havoc with the data, and it is better to suffer data loss, than deal with the corruption.

- Iterative testing. Flashback database can be a great tool when testing, to repeat the same test over and over. Typically a restore point is created before beginning a test, and the database is flashed back to that state. This allows an application team to be assured that the data is in the same state at the beginning of each iterative test.

Backups

Backups allow you to restore the database to a point-in-time prior to the current point-in-time. ZDLRA is the best product to use for backups.

ZDLRA offers

The most recent backups are kept local to the database to ensure quick restore time. here are typically 2 types of backups.

ZDLRA offers

- Near Zero RPO

- Very low RTO by using the proprietary incremental forever strategy. Incremental forever reduces recovery time, by creating a full restore point for each incremental backup taken.

Archive backups - These are often "keep" backups. They are special because they are a self contained backupset that allows you recovery only to a specific prior point-in-time. An example would be the creation of an archival backup on the last day of the month, that allows you recover only to the end of the backup time. A single recovery point for each month rather than any point during the month.

Point-in-time backups - These are backups that include the archive logs to recovery to any point-in-time. Typically these types of backups allow you to recover to any point in time from the beginning to the current point in time. For example, allow you to recover to any point in the last 30 days.

The advantages of backups are

- You can recover to any point-in-time within the recovery window. This window is much longer than flashback database can provide. Any logical corruptions can be corrected by restoring portions of "good" data, or the whole database.

- You can restore the database within the same data center returning the database to availability without affecting the application tier.

- Backups are very important if using active Data Guard. The issue may be with the read-only copy of the database. Since both the primary and the Data Guard copy are used by the application, restoring from a backup is the quickest method to rebuild either environment.

Disaster Recovery

Disaster Recovery is typically a documented, structured approach to dealing with unplanned incidents. These incidents include planning for a complete site failure involving the loss of all data centers within a geographical area.

The 2 major risks areas addressed by any approach are

RTO - Recovery Time Objective. How long does it take to return the application to full availability

RPO - Recovery Point Objective. How much data loss can be tolerated in the event of a disaster

Comparison

The bottom line when comparing the different technologies

Flashback database - Correct logical corruption that is immediately found.

Dataguard - Full disaster requiring moving the full environment. Keep application continuity in the event of a disaster.

Backups - Restore current environment to availability (production site or Data Guard site).

ZDLRA, by offering a very low RTO and RPO provides a solution that can be used to return availability in all but a datacenter disaster.